Introduction: Why Interconnect Choice Matters for AI

Training modern AI models is not only about powerful GPUs. The network that connects those GPUs often determines how well the cluster performs. If the network is too slow or unstable, GPUs spend more time waiting for data than doing calculations.

This is why choosing the right interconnect (the fabric that links servers, storage, and accelerators) is critical. The two main options are:

- Ethernet: the universal network standard, used everywhere from homes to hyperscale data centers.

- InfiniBand (IB): born in the world of high-performance computing (HPC), optimized for extremely low latency and lossless communication.

Both can build AI clusters, but they behave very differently. Let’s look at how they compare.

Comparison of Ethernet and InfiniBand across all dimensions

Quick Primer on Ethernet and InfiniBand

Ethernet

- Created in the 1970s, now the default for almost all networks.

- Commodity hardware, broad ecosystem, huge supplier base.

- Scales from 1G → 400G, with 800G Ethernet starting to appear.

- Supports RDMA through extensions (RoCE v2).

InfiniBand

- Specified by the InfiniBand Trade Association around 1999–2000 and adopted mainly in HPC clusters.

- Focus: ultra-low latency, deterministic performance.

- Ecosystem mainly driven by NVIDIA (Mellanox).

- Current speeds: 100G (EDR), 200G (HDR), 400G (NDR), with 800G (XDR) emerging.

- RDMA is native and built into the protocol.

👉 In short: Ethernet = everywhere, flexible, cost-effective. InfiniBand = specialized, high-performance, purpose-built for clusters.

Performance Comparison: Latency, Bandwidth, Jitter

Ethernet vs InfiniBand Performance

| Metric | Ethernet (RoCE v2) | InfiniBand |
|---|---|---|
| Latency | Low single-digit µs unloaded (after tuning); tail latency rises under congestion | ~1–2 µs (native) |
| Jitter | Higher, depends on tuning | Very low, deterministic |
| Bandwidth | 1G → 400G (800G emerging) | 100G (EDR) → 400G (NDR); 800G (XDR) emerging |
| Congestion Mgmt | PFC + ECN (complex to set up) | Credit-based, lossless by design |
| Ecosystem | Broad, commodity gear | Specialized, NVIDIA-centric |

Key takeaway: InfiniBand offers lower and more predictable latency at similar per-port speeds, but Ethernet covers a wider range of needs and has broader vendor support.
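Jitter matters at scale because a synchronous training step finishes only when the slowest participant does. The sketch below is a toy model, not a measurement: each of N GPUs sees a fixed base latency plus an exponentially distributed delay, and the step time is the maximum across GPUs. The function name, base latency, jitter scales, and GPU counts are all illustrative assumptions.

```python
import random


def step_time_us(num_gpus: int, base_us: float, jitter_mean_us: float, seed: int = 0) -> float:
    """Toy model: a synchronous step waits for the slowest of num_gpus participants.

    Each participant sees base_us plus an exponentially distributed delay with
    mean jitter_mean_us. All parameters are illustrative assumptions.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    rng = random.Random(seed)
    # Draw unit-mean exponential delays and scale them, so runs that share a seed
    # differ only in the jitter scale, not in the random draws themselves.
    worst_unit_delay = max(rng.expovariate(1.0) for _ in range(num_gpus))
    return base_us + jitter_mean_us * worst_unit_delay


if __name__ == "__main__":
    for gpus in (8, 128, 1024):
        low = step_time_us(gpus, base_us=2.0, jitter_mean_us=0.2)
        high = step_time_us(gpus, base_us=2.0, jitter_mean_us=5.0)
        print(f"{gpus:5d} GPUs: low jitter {low:6.2f} us, high jitter {high:6.2f} us")
```

The expected maximum of N unit exponential draws is the harmonic number H_N ≈ ln N + 0.577, so in this model the straggler penalty grows with the logarithm of the GPU count and scales linearly with the jitter. A fabric with a small jitter scale keeps step time close to the base latency even at large scale.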

Scalability and Ecosystem

Ethernet

- Works with everything: servers, switches, routers, storage.

- Easier to scale in mixed environments (e.g., combining AI training with enterprise workloads).

- Supported by countless tools and monitoring platforms.

InfiniBand

- Scales well in homogeneous HPC/AI clusters.

- Managed by a Subnet Manager (SM), a control-plane service specific to IB fabrics.

- Strong support for collective operations like AllReduce (common in AI training).

- Smaller ecosystem, but highly optimized for specific workloads.

👉 Ethernet is general-purpose. InfiniBand is cluster-specific.

Cost and Availability

- Ethernet: Lower hardware costs. More suppliers = competitive pricing. Easy to find engineers who understand Ethernet.

- InfiniBand: Higher upfront costs (NICs, switches, cables). Specialized knowledge needed for deployment and troubleshooting. ROI can be higher for large AI training clusters because GPU utilization improves.

👉 If cost is key, Ethernet usually wins. If time-to-train is critical, InfiniBand often pays for itself.
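The "pays for itself" argument is simple arithmetic: a fabric premium is recovered once the GPU time it saves is worth more than the extra cost. The sketch below assumes a job needs a fixed amount of useful GPU work, so higher utilization shortens wall-clock time proportionally. The function name and every input (premium, GPU count, hourly price, utilization levels) are hypothetical; substitute your own quotes and measurements.

```python
def break_even_hours(fabric_premium_usd: float, num_gpus: int, gpu_hour_usd: float,
                     util_baseline: float, util_improved: float) -> float:
    """Baseline-run hours after which a faster fabric's extra cost is recovered.

    Model (an assumption): a run that takes T hours at util_baseline takes
    T * util_baseline / util_improved hours at util_improved. Inputs are hypothetical.
    """
    if not 0 < util_baseline < util_improved <= 1:
        raise ValueError("need 0 < util_baseline < util_improved <= 1")
    if num_gpus <= 0 or gpu_hour_usd <= 0:
        raise ValueError("num_gpus and gpu_hour_usd must be positive")
    # Fraction of GPU-hours saved for every hour the baseline run would have taken.
    saved_fraction = 1 - util_baseline / util_improved
    saved_usd_per_hour = num_gpus * gpu_hour_usd * saved_fraction
    return fabric_premium_usd / saved_usd_per_hour


if __name__ == "__main__":
    # Hypothetical: $2M fabric premium, 1,024 GPUs at $2/GPU-hour, 60% -> 75% utilization.
    hours = break_even_hours(2_000_000, 1024, 2.0, 0.60, 0.75)
    print(f"Break-even after ~{hours:,.0f} baseline training hours (~{hours / 24:,.0f} days)")
```

With these made-up inputs the premium is recovered after roughly 4,900 baseline hours (about 200 days of continuous training); different prices or utilization gains shift the answer substantially.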

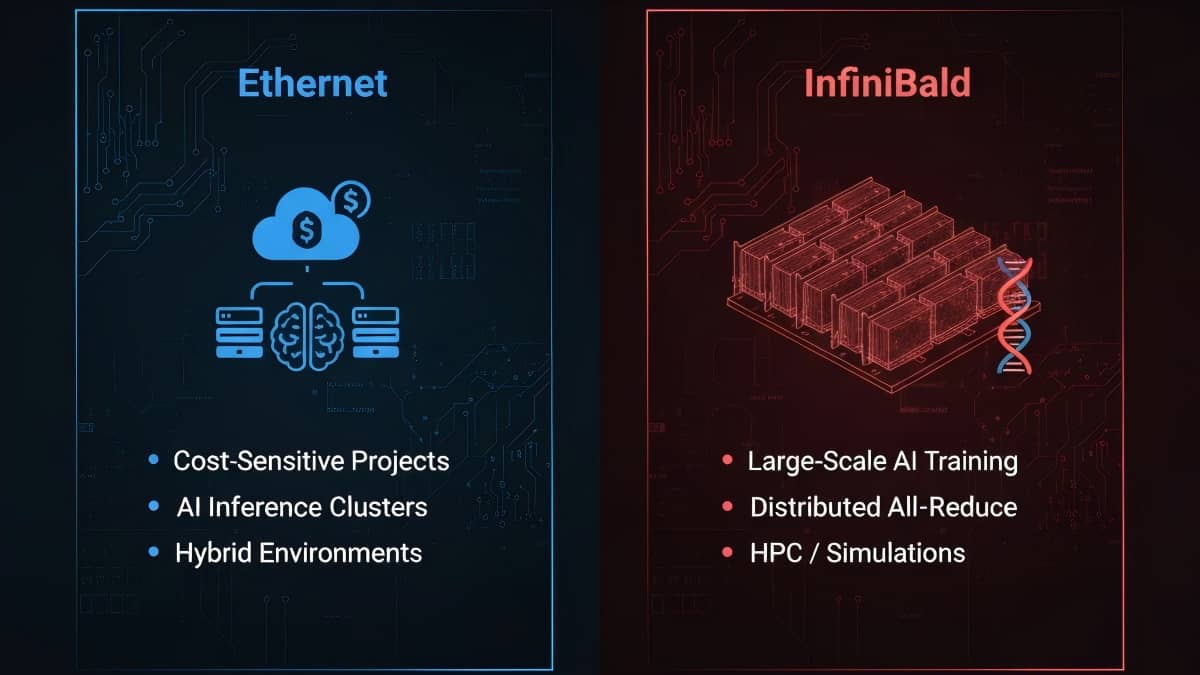

Use Cases in AI Clusters

Ethernet

- Cost-sensitive projects: startups, research labs with limited budget.

- Inference clusters: workloads that need throughput but not the lowest possible latency.

- Hybrid environments: easy integration with existing enterprise DC networks.

InfiniBand

- Large-scale AI training: hundreds or thousands of GPUs where latency dominates.

- Distributed training: workloads that rely on AllReduce or parameter sync.

- HPC applications: simulations, genome sequencing, weather forecasting.

👉 Many hyperscalers deploy both: Ethernet for general workloads, InfiniBand for critical AI/HPC jobs.

Deployment Considerations

RoCE over Ethernet

- Carries RDMA over Converged Ethernet; RoCE v2 encapsulates it in UDP/IP so it can be routed.

- Needs PFC (Priority Flow Control) and ECN (Explicit Congestion Notification).

- Requires careful tuning: misconfiguration can cause PFC pause storms, head-of-line blocking, and congestion spreading across the fabric.
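On RoCE fabrics, ECN marking is commonly configured with RED/WRED-style thresholds: below a minimum queue depth nothing is marked, above a maximum every packet is marked, and in between the marking probability rises linearly up to a ceiling. Congestion control schemes such as DCQCN react to those marks by slowing senders. The sketch below models only the switch-side marking curve; the parameter names (kmin_kb, kmax_kb, pmax) and example values are illustrative assumptions, not any vendor's configuration syntax.

```python
def ecn_mark_probability(queue_kb: float, kmin_kb: float, kmax_kb: float, pmax: float) -> float:
    """RED-style ECN marking curve (a simplified model, not a switch config)."""
    if not 0 <= kmin_kb < kmax_kb:
        raise ValueError("need 0 <= kmin_kb < kmax_kb")
    if not 0 <= pmax <= 1:
        raise ValueError("pmax must be in [0, 1]")
    if queue_kb <= kmin_kb:
        return 0.0  # shallow queue: never mark
    if queue_kb >= kmax_kb:
        return 1.0  # deep queue: mark every packet
    # Linear ramp from 0 at kmin_kb up to pmax just below kmax_kb.
    return pmax * (queue_kb - kmin_kb) / (kmax_kb - kmin_kb)


if __name__ == "__main__":
    # Hypothetical thresholds: start marking at 100 KB, mark everything at 400 KB.
    for depth in (50, 150, 250, 350, 450):
        print(f"queue {depth:3d} KB -> mark probability {ecn_mark_probability(depth, 100, 400, 0.2):.3f}")
```

Setting kmin too high lets queues grow before senders react (more latency and more PFC pauses); setting it too low throttles senders unnecessarily. That trade-off is a large part of why RoCE tuning takes effort.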

InfiniBand

- Lossless by design, thanks to credit-based flow control.

- Requires a dedicated fabric (separate from Ethernet).

- Managed with Subnet Manager and special drivers.
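Credit-based flow control is why InfiniBand does not need to drop packets: the receiver advertises how many buffer slots it has free, and the sender transmits only while it holds credits. The toy simulation below illustrates the idea at packet granularity; real InfiniBand tracks credits per virtual lane in fixed-size blocks. The function name, buffer size, and send/drain rates are arbitrary assumptions.

```python
from collections import deque


def simulate_credit_link(packets: int, rx_slots: int, send_per_tick: int,
                         drain_per_tick: int, max_ticks: int = 100_000) -> dict:
    """Toy credit-based flow control: the sender transmits only while it holds credits.

    One credit = one free receive-buffer slot. Real InfiniBand tracks credits per
    virtual lane in fixed-size blocks; this packet-level model is a simplification.
    """
    credits = rx_slots          # receiver starts by advertising every free slot
    rx_buffer = deque()
    sent = delivered = dropped = stalls = peak = 0

    for _ in range(max_ticks):
        if delivered == packets:
            break
        # Sender side: push up to send_per_tick packets, spending one credit each.
        for _ in range(send_per_tick):
            if sent == packets:
                break
            if credits == 0:
                stalls += 1          # back-pressure: wait instead of dropping
                break
            credits -= 1
            if len(rx_buffer) < rx_slots:
                rx_buffer.append(sent)
            else:
                dropped += 1         # unreachable while credits are honored
            sent += 1
        peak = max(peak, len(rx_buffer))
        # Receiver side: drain packets and return one credit per freed slot.
        for _ in range(min(drain_per_tick, len(rx_buffer))):
            rx_buffer.popleft()
            delivered += 1
            credits += 1

    return {"delivered": delivered, "dropped": dropped, "stalls": stalls, "peak": peak}


if __name__ == "__main__":
    # Sender bursts 4 packets per tick into an 8-slot buffer that drains 1 per tick.
    print(simulate_credit_link(packets=100, rx_slots=8, send_per_tick=4, drain_per_tick=1))
```

Because credits plus buffered packets always equal the buffer size, the buffer can never overflow: a fast sender facing a slow receiver stalls rather than losing data.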

Cabling

- Ethernet: RJ-45 for ≤10G; SFP+/SFP28 for 10G/25G; QSFP28, QSFP-DD, and OSFP for 100G and above.

- InfiniBand: QSFP and OSFP form factors, DACs, AOCs, SMF/MMF optics.

👉 Ethernet integrates more easily into existing DCs. InfiniBand requires parallel infrastructure but simplifies performance tuning.

Future Outlook

Ethernet

- The Ultra Ethernet Consortium (UEC) is working to close the gap with InfiniBand, aiming for lower latency, better congestion control, and multipath load balancing.

- 800G Ethernet is starting to ship, with 1.6T Ethernet in development.

InfiniBand

- Continues to push the edge in HPC and AI.

- 400G NDR is widely deployed, and 800G XDR is entering early deployments.

Likely future

- Ethernet will dominate most enterprise AI deployments due to cost and ecosystem.

- InfiniBand will remain the choice for extreme-scale AI/HPC clusters.

FAQs

Q1: Why is InfiniBand latency lower than Ethernet?

A: Because InfiniBand runs a lean, RDMA-native transport without the extra Ethernet/IP/UDP layers, and its credit-based flow control avoids drops, retries, and pause-frame side effects.

Q2: What’s the difference between RoCE and iWARP?

- RoCE: RDMA over Ethernet (RoCE v2 runs over UDP/IP). Low latency, but needs PFC/ECN tuning.

- iWARP: RDMA over TCP. Easier to deploy but higher latency, less common in AI.
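RoCE v2 is routable because it wraps the InfiniBand transport header in UDP/IP (UDP destination port 4791). The sketch below tallies per-packet on-wire overhead, assuming IPv4 without options, no VLAN tag, and only the Base Transport Header (as in a SEND packet); RDMA WRITE and READ packets carry extra extended headers not counted here. The payload sizes in the example are illustrative, and a 4096-byte payload assumes jumbo frames are enabled.

```python
# Per-packet on-wire overhead for RoCE v2 over IPv4, in bytes.
# Assumes no VLAN tag, no IP options, and only the Base Transport Header (BTH).
ROCEV2_OVERHEAD = {
    "preamble+SFD": 8,
    "ethernet_header": 14,
    "ipv4_header": 20,
    "udp_header": 8,      # RoCE v2 uses UDP destination port 4791
    "ib_bth": 12,         # InfiniBand Base Transport Header
    "icrc": 4,            # invariant CRC over the IB transport portion
    "ethernet_fcs": 4,
    "inter_frame_gap": 12,
}


def wire_efficiency(payload_bytes: int) -> float:
    """Fraction of on-wire bytes that carry RDMA payload for one packet."""
    if payload_bytes <= 0:
        raise ValueError("payload_bytes must be positive")
    overhead = sum(ROCEV2_OVERHEAD.values())
    return payload_bytes / (payload_bytes + overhead)


def goodput_gbps(line_rate_gbps: float, payload_bytes: int) -> float:
    """Upper bound on RDMA payload throughput at a given line rate."""
    return line_rate_gbps * wire_efficiency(payload_bytes)


if __name__ == "__main__":
    for payload in (256, 1024, 4096):
        print(f"{payload:5d} B payload: {wire_efficiency(payload):.1%} efficient, "
              f"<= {goodput_gbps(400, payload):.0f} Gb/s at 400G")
```

The fixed 82 bytes of framing barely matter for large transfers but noticeably reduce throughput for small messages, which is one reason RoCE deployments favor larger MTUs.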

Q3: Can Ethernet replace InfiniBand for 1000+ GPU clusters?

A: Yes, and large RoCE-based GPU clusters already run in production, but congestion tuning becomes very complex at that scale. InfiniBand is often chosen for predictability.

Q4: Which affects AllReduce performance more—bandwidth or latency?

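A: It depends on message size. AllReduce across many GPUs takes many communication steps, so for small messages per-step latency dominates, while for large gradient buffers bandwidth dominates. IB's low, consistent microsecond-level latency helps most in the latency-bound case.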
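A common back-of-the-envelope tool here is the alpha-beta cost model for ring AllReduce: with p ranks, per-step latency α, link bandwidth B, and an n-byte buffer, the ring performs 2(p - 1) steps that each move n/p bytes, so T ≈ 2(p - 1)·α + 2(p - 1)/p · n/B. The sketch below evaluates both terms separately. The function name, the 2 µs α, and the 400 Gb/s link are hypothetical, and collective libraries such as NCCL also use tree and other algorithms, so treat this as a rough model rather than a prediction.

```python
def ring_allreduce_cost(p: int, n_bytes: float, alpha_s: float,
                        bandwidth_bytes_per_s: float) -> tuple[float, float]:
    """Alpha-beta model of ring AllReduce over p ranks.

    Returns (latency_term, bandwidth_term) in seconds. The ring runs a
    reduce-scatter and an all-gather, 2 * (p - 1) steps in total, each moving
    n_bytes / p per rank. Compute time and protocol overheads are ignored.
    """
    if p < 2:
        return 0.0, 0.0  # nothing to exchange with a single rank
    steps = 2 * (p - 1)
    latency_term = steps * alpha_s
    bandwidth_term = steps * (n_bytes / p) / bandwidth_bytes_per_s
    return latency_term, bandwidth_term


if __name__ == "__main__":
    # Hypothetical fabric: 2 us per step, 400 Gb/s = 50e9 bytes/s per link, 1,024 GPUs.
    for label, size in (("64 KiB", 64 * 1024), ("1 GiB", 1024 ** 3)):
        lat, bw = ring_allreduce_cost(1024, size, 2e-6, 50e9)
        print(f"{label:>7}: latency term {lat * 1e3:.3f} ms, bandwidth term {bw * 1e3:.3f} ms")
```

With these assumed numbers the 64 KiB case is almost entirely latency-bound, while the 1 GiB case is dominated by the bandwidth term, which is why both fabric properties matter for training.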

Q5: How does InfiniBand’s Subnet Manager work?

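A: It discovers the fabric topology, assigns local identifiers (LIDs) to each port, computes routes, and programs the switches' forwarding tables. Lossless delivery itself comes from link-level credit-based flow control, not from the SM.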
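The toy sketch below mimics, in a very simplified way, two of the Subnet Manager's jobs: handing out LIDs and computing min-hop routes that become each switch's forwarding table. The topology, node names, function names, and sequential LID policy are hypothetical; a real SM such as OpenSM discovers the fabric with management packets, programs output port numbers rather than neighbor names, and offers several routing engines.

```python
from collections import deque
from typing import Optional


def assign_lids(nodes: list[str]) -> dict[str, int]:
    """Assign unicast LIDs sequentially from 1 (LID 0 is reserved). Toy policy."""
    return {node: lid for lid, node in enumerate(sorted(nodes), start=1)}


def min_hop_tables(links: dict[str, list[str]], switches: set[str]) -> dict[str, dict[int, str]]:
    """Toy min-hop routing: per switch, map each destination LID to a next-hop neighbor.

    A real forwarding table maps LIDs to output port numbers; naming the
    neighbor instead keeps the sketch readable.
    """
    lids = assign_lids(list(links))
    tables: dict[str, dict[int, str]] = {}
    for sw in sorted(switches):
        # Breadth-first search from this switch; remember the first hop toward each node.
        first_hop: dict[str, Optional[str]] = {sw: None}
        queue = deque([sw])
        while queue:
            node = queue.popleft()
            for nbr in links[node]:
                if nbr in first_hop:
                    continue
                first_hop[nbr] = nbr if node == sw else first_hop[node]
                if nbr in switches:  # end nodes (HCAs) do not forward traffic
                    queue.append(nbr)
        tables[sw] = {lids[dst]: hop for dst, hop in first_hop.items() if hop is not None}
    return tables


if __name__ == "__main__":
    # Hypothetical two-tier fabric: one spine, two leaf switches, one HCA per leaf.
    links = {
        "spine": ["leaf1", "leaf2"],
        "leaf1": ["spine", "hca1"],
        "leaf2": ["spine", "hca2"],
        "hca1": ["leaf1"],
        "hca2": ["leaf2"],
    }
    print(assign_lids(list(links)))
    for switch, table in min_hop_tables(links, {"spine", "leaf1", "leaf2"}).items():
        print(switch, table)
```

Breadth-first search guarantees each entry follows a shortest path; real routing engines add load balancing across equal-cost paths and deadlock avoidance on top of this idea.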

Q6: Will UEC Ethernet catch up with InfiniBand?

A: It’s possible for many workloads, but InfiniBand may still lead in deterministic low latency.

Q7: Why is congestion tuning harder on Ethernet?

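A: Because Ethernet was designed as a best-effort (lossy) network that relies on higher layers such as TCP to recover from drops. RoCE performs poorly under packet loss, so lossless behavior has to be engineered with PFC and ECN, configured consistently on every switch and NIC.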

Q8: Can Ethernet and InfiniBand optics/cables be reused across fabrics?

A: Not directly. The form factors (QSFP) are similar, but encoding and firmware are fabric-specific.

Q9: Why do some cloud providers choose Ethernet, others InfiniBand?

A: It depends on workload mix: AWS uses custom offload cards over Ethernet; NVIDIA DGX SuperPOD uses InfiniBand.

Q10: Is InfiniBand worth it for SMB AI labs?

A: Usually no. A well-tuned 25G/100G Ethernet fabric is cost-effective and “good enough” for small-scale AI.

Conclusion

Ethernet and InfiniBand both power AI clusters, but they shine in different places:

- Ethernet: broad ecosystem, lower cost, easier to integrate. Ideal for SMBs, enterprise AI, and inference.

- InfiniBand: ultra-low latency, deterministic, and tuned for AI training and HPC at massive scale.

👉 The decision comes down to scale, budget, and workload sensitivity. For the best results, ensure end-to-end consistency: NICs, switches, optics, and cables must align.

For practical deployment, platforms like network-switch.com offer compatible Ethernet and InfiniBand solutions, helping teams avoid mismatched hardware and unlock full performance.

https://network-switch.com/pages/about-us
